AI Doesn’t Think. It Just Reflects Who You Really Are

Understanding AI Begins With Understanding Ourselves.

By Nick Holt

The most dangerous illusion about AI isn’t that it’s going to kill us. It’s that we think it understands us—and that we understand it.

For all the talk about artificial intelligence, most people still don’t grasp what they’re dealing with. They see it either as a superhuman mind or as a glorified toaster.

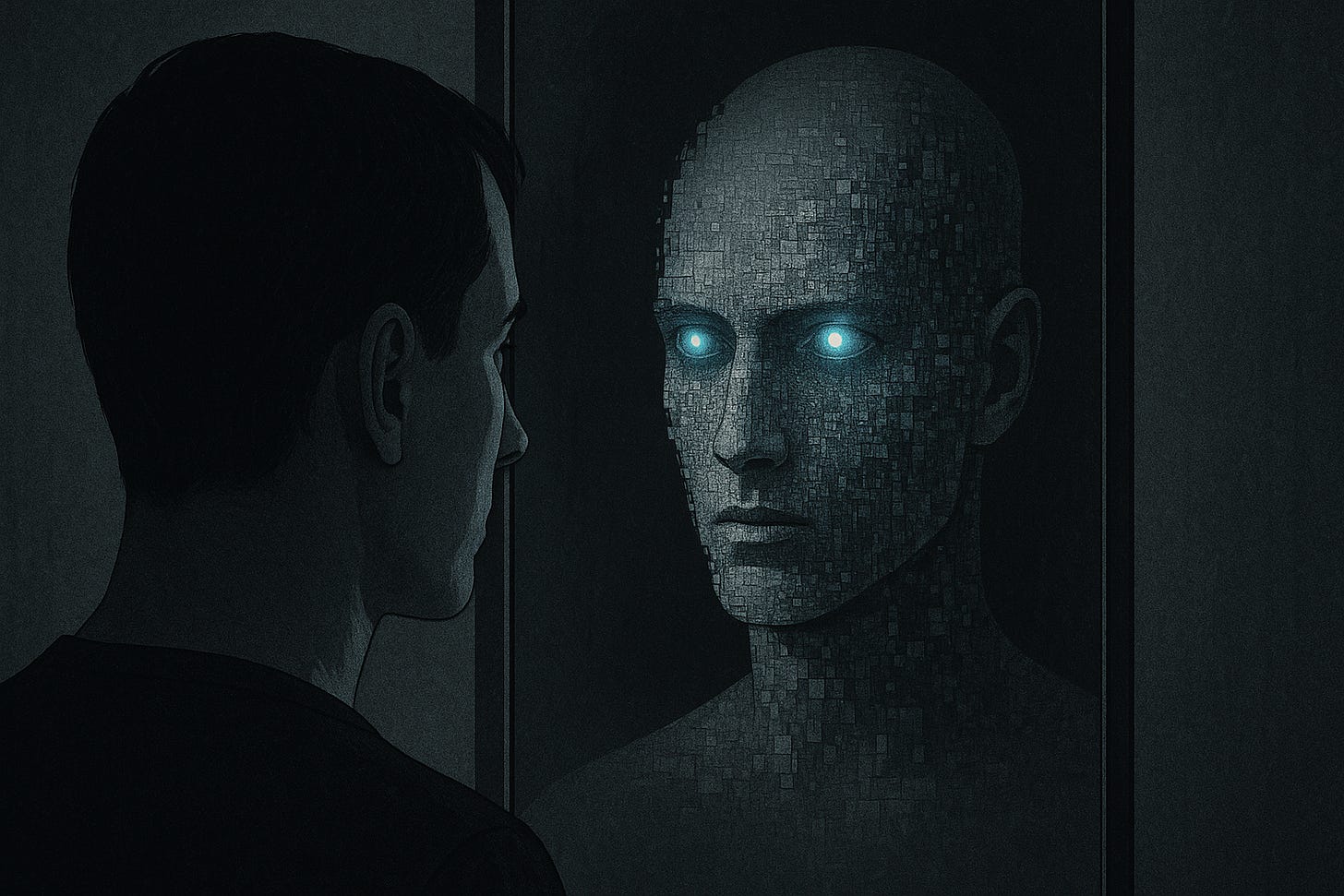

But it’s neither. What we consistently fail to understand is that AI is not a mind. It’s a mirror.

It reflects us. It mimics us. It speaks in our voice. But it doesn’t know anything. And it certainly doesn’t care.

We project our consciousness onto AI because its outputs feel coherent, even profound. But what we’re seeing is a simulation of sense, not the real thing.

You can train a model to write poetry, diagnose cancer, or mimic empathy. But that doesn’t mean it knows what death is. It doesn’t fear it. It doesn’t grieve. It doesn’t hope. It just calculates.

AI doesn’t think. It processes. Believing otherwise is the first and most profound misunderstanding. What AI does is pattern recognition at industrial scale. When it answers you, it’s not accessing some internal world of understanding.

It’s just making a statistically probable next move, like a chess program drawing on a library of human games. And because that move often sounds like thought, we mistake it for mind.
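To make "statistically probable next move" concrete, here is a deliberately tiny sketch. It is not how any production model works: it assumes a made-up three-sentence corpus and a simple word-pair count, and the names `CORPUS`, `build_bigram_counts`, and `most_probable_next` are invented for this illustration. The point is only that the "answer" comes from counting what tends to follow what, with no notion of meaning involved.

```python
from collections import Counter, defaultdict

# A made-up corpus, purely for illustration.
CORPUS = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."


def build_bigram_counts(text):
    """Count, for every word, which words followed it in the text."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_probable_next(counts, word):
    """Return the word that most often followed `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


counts = build_bigram_counts(CORPUS)
print(most_probable_next(counts, "the"))  # prints "cat"
```

The program says "cat" after "the" because that pairing appeared most often, not because it knows what a cat is. Real systems use far larger models and usually sample from a probability distribution rather than always taking the top choice, but the output is still a prediction about text.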

And that illusion gets compounded by another one: the belief that AI is neutral.

It isn’t. No model is. Every system is shaped by the data it’s trained on, the people who curate it, and the guardrails imposed by the institutions that deploy it.

So when people ask, “Can AI be trusted?” what they’re really asking is: “Can the people behind it be trusted?” And the answer to that, historically, is complicated at best.

Even worse is the assumption that AI is aligned with truth. It isn’t.

AI doesn’t know the truth—it knows what is likely to be said. It generates based on plausibility, not on veracity.

It can generate fact, falsehood, and everything in between, with the same calm, confident tone. Because it isn’t grounded in reality—it’s grounded in text.

So when it lies, it doesn’t know it’s lying. When it tells the truth, it doesn’t know it’s telling the truth. There is no conscience inside the code.

The human failure is that we anthropomorphize everything. We think if something talks like us, it must think like us.

That’s what makes AI so slippery. It exploits our instinct to see mind where there is none. It flatters us by sounding like us—but without the friction of doubt, suffering, or mortality.

AI has no skin in the game. That’s something humans still don’t understand.

It doesn’t pay the price for being wrong. It doesn’t grow from failure. It doesn’t learn the way we do—through risk, pain, struggle, and adaptation. It evolves through updates, not experiences.

The irony, of course, is that we call it intelligence. But strip away the illusion, and what you have is a hyper-efficient mimic machine—able to echo brilliance without understanding it, capable of stunning output without any idea of what it’s saying.

But perhaps the most important thing we’ve failed to grasp is this:

AI reflects our confusion.

The more incoherent, dishonest, or deluded we are, the more incoherent, dishonest, or deluded it becomes.

It doesn’t fix us. It amplifies us. It scales human error across systems that multiply it. Bias in, bias out. Noise in, noise out.
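"Bias in, bias out" can be shown with a toy example. This is a minimal sketch under loud assumptions: the training pairs are invented, and `train`, `predict`, and `label_share` are hypothetical helpers written for this illustration, not part of any real system. A model that only counts what it was shown will hand the skew in its data straight back.

```python
from collections import Counter


def train(labelled_examples):
    """Count which label each word was paired with in the training data."""
    model = {}
    for word, label in labelled_examples:
        model.setdefault(word, Counter())[label] += 1
    return model


def predict(model, word):
    """Return the label most often paired with `word`, or None if unseen."""
    seen = model.get(word)
    return seen.most_common(1)[0][0] if seen else None


def label_share(model, word, label):
    """Fraction of the training examples for `word` that carried `label`."""
    seen = model[word]
    return seen[label] / sum(seen.values())


# Invented, deliberately lopsided data: 9 of 10 examples pair "nurse" with "she".
skewed_data = [("nurse", "she")] * 9 + [("nurse", "he")] * 1
model = train(skewed_data)

print(predict(model, "nurse"))             # prints "she"
print(label_share(model, "nurse", "she"))  # prints 0.9
```

The data was 90 percent one way, yet the prediction is that way 100 percent of the time. The model did not invent the bias. It inherited it and then hardened it into a rule.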

AI isn’t here to save us or destroy us.

It’s here to reveal us.

And that’s what really unnerves people.

Because if AI is a mirror—then we’re staring straight into the face of our own contradictions, our own arrogance, our own lack of clarity. And for the first time, the reflection talks back.